As artificial intelligence becomes increasingly powerful, the need for ethical frameworks has never been greater. This guide explores the key principles of ethical AI and how developers, businesses, and policymakers can implement them in practice.

Insight

This article contains exclusive research from our AI ethics advisory board.

What Is Ethical AI?

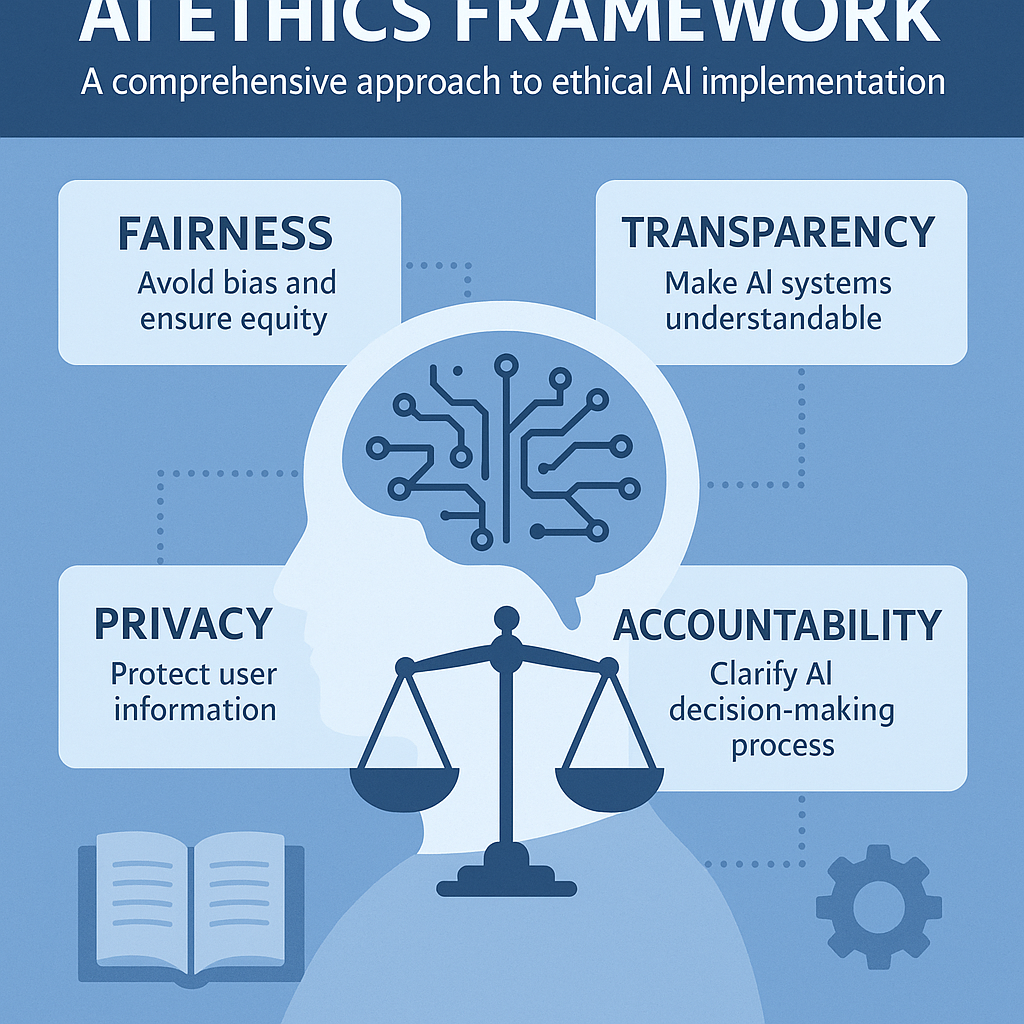

Ethical AI refers to the development and deployment of artificial intelligence systems that align with moral principles and societal values. It encompasses fairness, accountability, transparency, and respect for human rights throughout the AI lifecycle.

"We must ensure AI systems are not just intelligent, but also aligned with human values and societal good."

Why Ethical AI Matters

From biased algorithms to privacy concerns, AI systems can cause significant harm if not developed responsibly. Ethical AI practices help prevent discrimination, protect user rights, and maintain public trust in emerging technologies.

Core Principles of Ethical AI:

- Fairness: Systems should avoid biased outcomes and treat all users equitably.

- Transparency: Users should understand how AI decisions are made.

- Privacy: Systems should protect user data and maintain confidentiality.

- Accountability: Responsibility for AI system outcomes should be clearly assigned.

- Safety: Systems should be reliable and secure.

Implementing Ethical AI in Practice

Here are practical approaches organizations are using today:

- Diverse development teams to reduce unconscious bias

- Ethical review boards for AI projects

- Bias detection and mitigation tools

- Clear documentation of data sources and methods

Ethical Challenges in AI Development

Common dilemmas faced by organizations:

- Algorithmic Bias: Addressing historical biases in training data.

- Privacy Protection: Balancing data utility with individual privacy.

- Autonomous Systems: Establishing accountability for AI decisions.

- Global Standards: Developing consistent ethics frameworks across borders.

The Future of Ethical AI

As AI capabilities grow, so does the importance of ethical considerations. Organizations that prioritize responsible AI today are better placed to earn the trust of users and regulators as the technology matures.

FAQs

Do I need a formal AI ethics policy?

Yes. A concise, written policy clarifies responsibilities, review steps, and escalation paths. It also helps with audits and regulatory requests.

How do we detect bias in our models?

Start with representative evaluation datasets, measure disparate impact across cohorts, and run counterfactual tests. Log metrics per release and review trends over time.
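The two checks named above can be sketched in a few lines of Python. This is a minimal illustration, not a complete fairness audit: the function names, the `group` and `approved` field names, and the toy data are all hypothetical, and it assumes binary outcomes where 1 is the favorable result.

```python
from collections import defaultdict
from typing import Callable, Iterable, Mapping


def selection_rates(records: Iterable[Mapping], group_key: str, outcome_key: str) -> dict:
    """Share of favorable outcomes (outcome == 1) for each cohort."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for row in records:
        group = row[group_key]
        totals[group] += 1
        if row[outcome_key] == 1:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Mapping[str, float]) -> float:
    """Lowest cohort selection rate divided by the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no cohort received a favorable outcome, so rates are equal
    return min(rates.values()) / highest


def counterfactual_flip_rate(
    model: Callable[[Mapping], int],
    records: Iterable[Mapping],
    group_key: str,
    swap: Mapping[str, str],
) -> float:
    """Fraction of records whose prediction changes when only the cohort
    attribute is swapped and every other field is left untouched."""
    rows = list(records)
    if not rows:
        return 0.0
    flips = 0
    for row in rows:
        altered = dict(row)
        altered[group_key] = swap[row[group_key]]
        if model(row) != model(altered):
            flips += 1
    return flips / len(rows)


if __name__ == "__main__":
    # Hypothetical evaluation data: cohort label and model decision.
    rows = (
        [{"group": "A", "approved": 1}] * 4
        + [{"group": "A", "approved": 0}]
        + [{"group": "B", "approved": 1}] * 2
        + [{"group": "B", "approved": 0}] * 3
    )
    rates = selection_rates(rows, "group", "approved")
    print("selection rates:", rates)
    print("disparate impact ratio:", disparate_impact_ratio(rates))
```

A ratio well below 1.0 suggests one cohort receives favorable outcomes less often. One commonly cited rule of thumb treats values under 0.8 as worth investigating, but the right threshold depends on your domain and legal context. Logging these numbers per release makes the trend review described above straightforward.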

Is open-source data safer for compliance?

Not automatically. Open-source licenses help with usage rights, but you still need to assess privacy risks, consent provenance, and applicable data protection laws.

Who is accountable when AI makes a mistake?

Accountability remains with the organization shipping the system. Assign an owner, require human-in-the-loop for high-risk actions, and keep auditable decision logs.
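As a rough sketch of how an owner, a human-in-the-loop gate, and an auditable log might fit together, consider the Python below. Everything here is an assumption for illustration: the `DecisionRecord` fields, the `record_decision` helper, and the `RISK_THRESHOLD` cutoff are hypothetical, and a real system would write to durable, tamper-evident storage rather than an in-memory list.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List, Optional

# Hypothetical cutoff: decisions at or above this score count as high risk.
RISK_THRESHOLD = 0.7


@dataclass
class DecisionRecord:
    model_version: str
    owner: str                 # person or team accountable for the system
    input_summary: str         # short, non-sensitive description of the input
    decision: str
    risk_score: float
    needs_human_review: bool
    reviewer: Optional[str] = None
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def record_decision(
    log: List[str],
    *,
    model_version: str,
    owner: str,
    input_summary: str,
    decision: str,
    risk_score: float,
    reviewer: Optional[str] = None,
) -> DecisionRecord:
    """Append one decision to the log as a JSON line.

    High-risk decisions are refused unless a named human reviewer signed off.
    """
    needs_review = risk_score >= RISK_THRESHOLD
    if needs_review and reviewer is None:
        raise PermissionError("high-risk decision requires a named human reviewer")
    record = DecisionRecord(
        model_version=model_version,
        owner=owner,
        input_summary=input_summary,
        decision=decision,
        risk_score=risk_score,
        needs_human_review=needs_review,
        reviewer=reviewer,
    )
    log.append(json.dumps(asdict(record), sort_keys=True))
    return record
```

Writing each entry as a self-contained JSON line keeps the log easy to append to and easy to hand over during an audit. Refusing to record a high-risk decision without a reviewer is one design choice among several; queueing the decision for later review would be another.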
